
We are on the cusp of realizing our vision for AI-powered services in enterprises. In our view, AI services function as microservices without executable code. Instead, an AI model performs tasks traditionally handled by human-written (or AI-generated) code. Bringing this to life will disrupt the entire backend application development paradigm.

Based on my experience, this paradigm could replace roughly 50%-70% of services: the long tail that together accounts for only about 20% of overall load. Many of these services receive hundreds or thousands of invocations daily rather than millions or billions. Because AI is still relatively costly and slow, high-throughput and latency-sensitive services will not be disrupted yet. However, many low-volume, non-latency-sensitive services are ready for transformation today.
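
To make the screening criteria concrete, here is a minimal sketch of how a team might flag candidate services by volume and latency tolerance. The thresholds, the `ServiceProfile` type, and the example service names are all hypothetical and chosen only for illustration; they are not figures from Poly.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; tune them to your own
# cost and latency budgets.
MAX_DAILY_INVOCATIONS = 10_000
MIN_LATENCY_BUDGET_MS = 2_000


@dataclass(frozen=True)
class ServiceProfile:
    name: str
    daily_invocations: int
    latency_budget_ms: int  # slowest response the callers can tolerate


def is_ai_service_candidate(profile: ServiceProfile) -> bool:
    """Return True for low-volume services whose callers tolerate model latency."""
    low_volume = profile.daily_invocations <= MAX_DAILY_INVOCATIONS
    latency_tolerant = profile.latency_budget_ms >= MIN_LATENCY_BUDGET_MS
    return low_volume and latency_tolerant


# Made-up services: one long-tail lookup and one high-throughput hot path.
services = [
    ServiceProfile("journal-entry-lookup", daily_invocations=800, latency_budget_ms=5_000),
    ServiceProfile("payment-authorization", daily_invocations=40_000_000, latency_budget_ms=150),
]

candidates = [s.name for s in services if is_ai_service_candidate(s)]
print(candidates)  # ['journal-entry-lookup']
```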

Challenges to Mass Adoption

Five major hurdles must be overcome before widespread adoption occurs. Once adoption begins, it will progress like a rising tidal wave. However, a mass extinction event in the enterprise space is unlikely. Enterprises have more IT infrastructure than they can systematically replace within a decade or more. The transition cost—budget constraints, labor limitations, risk appetite, and management capacity—will dictate the pace. As a result, AI services will steadily integrate into enterprises rather than rapidly replacing existing systems.

The five key hurdles are:

- Existing Services – The cost of retiring them, porting data, retraining, and so on will be a significant obstacle.

- Trust in AI Models and Providers – Enterprises must become comfortable with core data, including customer transactions, payments, and accounting, being processed by AI models hosted by trusted providers.

- Understanding AI Capabilities – Many enterprise application creators lack clarity on when to use traditional code versus AI services.

- Limited Exposure to AI-powered UIs – AI is often perceived as merely chatbot-driven, but it can operate behind the scenes, enhancing traditional UI experiences.

- Enterprise Packaging – AI service platforms, including Poly, don’t provide a comprehensive “whole product” experience.

How AI Services Differ from Traditional Services

Beyond eliminating the need for written and maintained code, AI services excel at performing fuzzy tasks that are difficult to program. This makes them particularly valuable in ambiguous use cases.

Examples of AI-powered intelligent search:

- Searching for “John from Portugal” to find a customer record.

- Looking up “People who attended the rock concert on Feb 19th.”

- Finding “Bookings of suites where the special request is for beverage services.”

- Retrieving “Journal entries related to the maintenance of our facility.”

AI services reduce development and maintenance costs and enable more powerful user experiences. The interface does not need to be a chatbot; a traditional UI with streamlined search can function as a prompt-driven system. Results can be structured for clarity, enabling further enhancements such as presenting graphs or offering actions like downloading or emailing reports. There are too many cases to list here where AI-powered UIs are a better fit than a chat or voice experience.
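
As a rough sketch of a search box acting as a prompt-driven system, the example below sends free text to a model and parses the reply into a typed, structured result that a traditional UI could render as a table with action buttons. The `SearchResult` shape, the JSON reply format, and `stub_model` are assumptions made for this illustration, and the customer row is canned demo data, not a real Poly API or record.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SearchResult:
    columns: list[str]
    rows: list[list[str]]
    actions: list[str]  # follow-up actions the UI can render as buttons


def run_search(prompt: str, model: Callable[[str], str]) -> SearchResult:
    """Send the search-box text to a model and parse its JSON reply into a typed result."""
    payload = json.loads(model(prompt))
    result = SearchResult(
        columns=list(payload["columns"]),
        rows=[list(row) for row in payload["rows"]],
        actions=list(payload.get("actions", [])),
    )
    # Reject malformed replies so the UI never renders a ragged table.
    if any(len(row) != len(result.columns) for row in result.rows):
        raise ValueError("row length does not match column count")
    return result


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call; returns a canned reply for the demo."""
    return json.dumps({
        "columns": ["name", "country"],
        "rows": [["John Silva", "Portugal"]],
        "actions": ["download", "email"],
    })


result = run_search("John from Portugal", stub_model)
print(result.rows)  # [['John Silva', 'Portugal']]
```

Because the reply is validated into a fixed shape, the front end can stay a conventional table or chart component and never needs to display raw model text.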

Realizing This Vision

The biggest challenge in executing this vision is building a platform that meets enterprise needs while supporting both AI-powered and traditional integrations. Poly is uniquely positioned to deliver this by enabling hybrid integrations, where some endpoints operate traditionally while others leverage AI—or even a combination of both.

We recognized this shift in early 2023, when ChatGPT plugins were announced, and have since been laying the groundwork to support both conventional development and AI-powered approaches. Traditional middleware platforms face significant hurdles in adapting, as they must either overhaul existing systems or build next-generation platforms, which introduces high switching costs for customers. New platforms that focus only on the AI approach do not appeal to enterprises because they don’t address the whole set of enterprise needs.

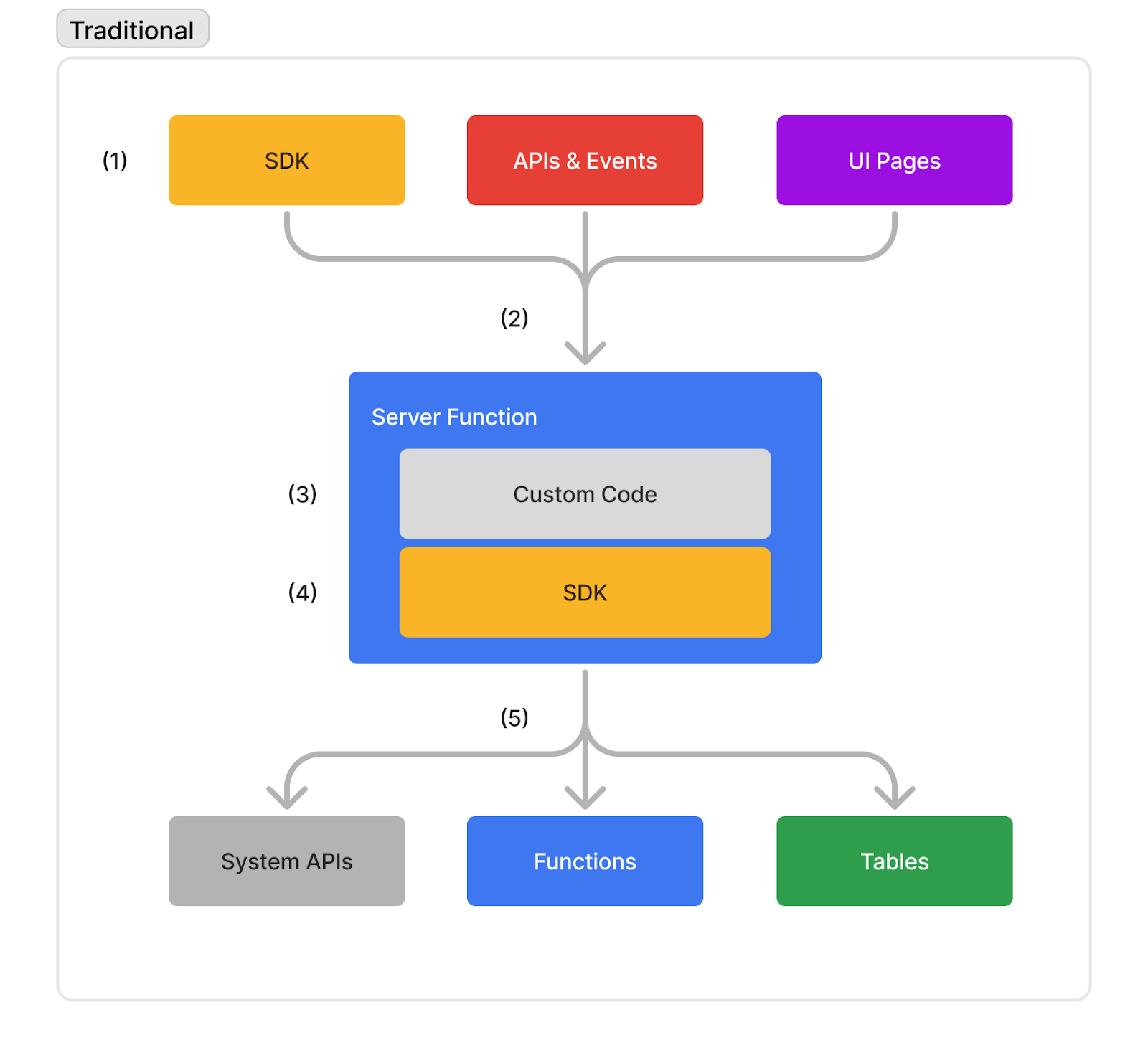

Our key innovation lies in encapsulating AI services in the same “function” model as other Poly primitives, so that coded and AI-powered implementations become consistent alternatives behind the same interface. Today, functions in Poly work like this:

1. Invocation Methods – Functions can be invoked in three ways:

- SDKs embedded in client applications (TypeScript, Python, Java, C#).

- Triggers from webhooks (API events, errors), with future support for native Kafka, GraphQL subscriptions, etc.

- Canopy UI configurations tied to UI elements like page loads and button clicks.

2. Strongly Typed Interfaces – Functions support strongly typed interfaces, defined directly or via schemas.

3. Flexible Implementation – Developers implement function logic in their preferred language and can import external libraries as needed.

4. Unified Poly SDK – The generated SDK enables seamless, secure interaction with underlying enterprise systems, other Poly Functions, and Poly Tables.

5. Cataloged & Controlled Interactions – Underlying system APIs, Poly Functions (server functions or AI services), and Poly Tables are strongly typed and cataloged. All interactions pass through an outbound gateway (not shown) for observability and control.
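
The cataloged, typed, gateway-mediated interaction described in the list above can be pictured with a small sketch. Everything here (`CatalogEntry`, `OutboundGateway`, the `crm.get_customer` entry) is a hypothetical stand-in written for this article, not Poly's actual SDK or gateway API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    params: dict[str, type]      # parameter name -> expected type
    handler: Callable[..., Any]  # system API, server function, or table access


@dataclass
class OutboundGateway:
    catalog: dict[str, CatalogEntry] = field(default_factory=dict)
    audit_log: list[str] = field(default_factory=list)

    def register(self, entry: CatalogEntry) -> None:
        self.catalog[entry.name] = entry

    def call(self, name: str, **kwargs: Any) -> Any:
        entry = self.catalog[name]  # raises KeyError for uncataloged targets
        if set(kwargs) != set(entry.params):
            raise TypeError(f"{name} expects parameters {sorted(entry.params)}")
        for key, expected in entry.params.items():
            if not isinstance(kwargs[key], expected):
                raise TypeError(f"{name}.{key} expects {expected.__name__}")
        self.audit_log.append(name)  # observability: record each validated call
        return entry.handler(**kwargs)


gateway = OutboundGateway()
gateway.register(CatalogEntry(
    name="crm.get_customer",
    params={"customer_id": int},
    handler=lambda customer_id: {"id": customer_id, "status": "active"},
))

customer = gateway.call("crm.get_customer", customer_id=42)
print(customer)           # {'id': 42, 'status': 'active'}
print(gateway.audit_log)  # ['crm.get_customer']
```

Routing every call through one choke point is what makes type checks and logging uniform, regardless of what sits behind each catalog entry.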

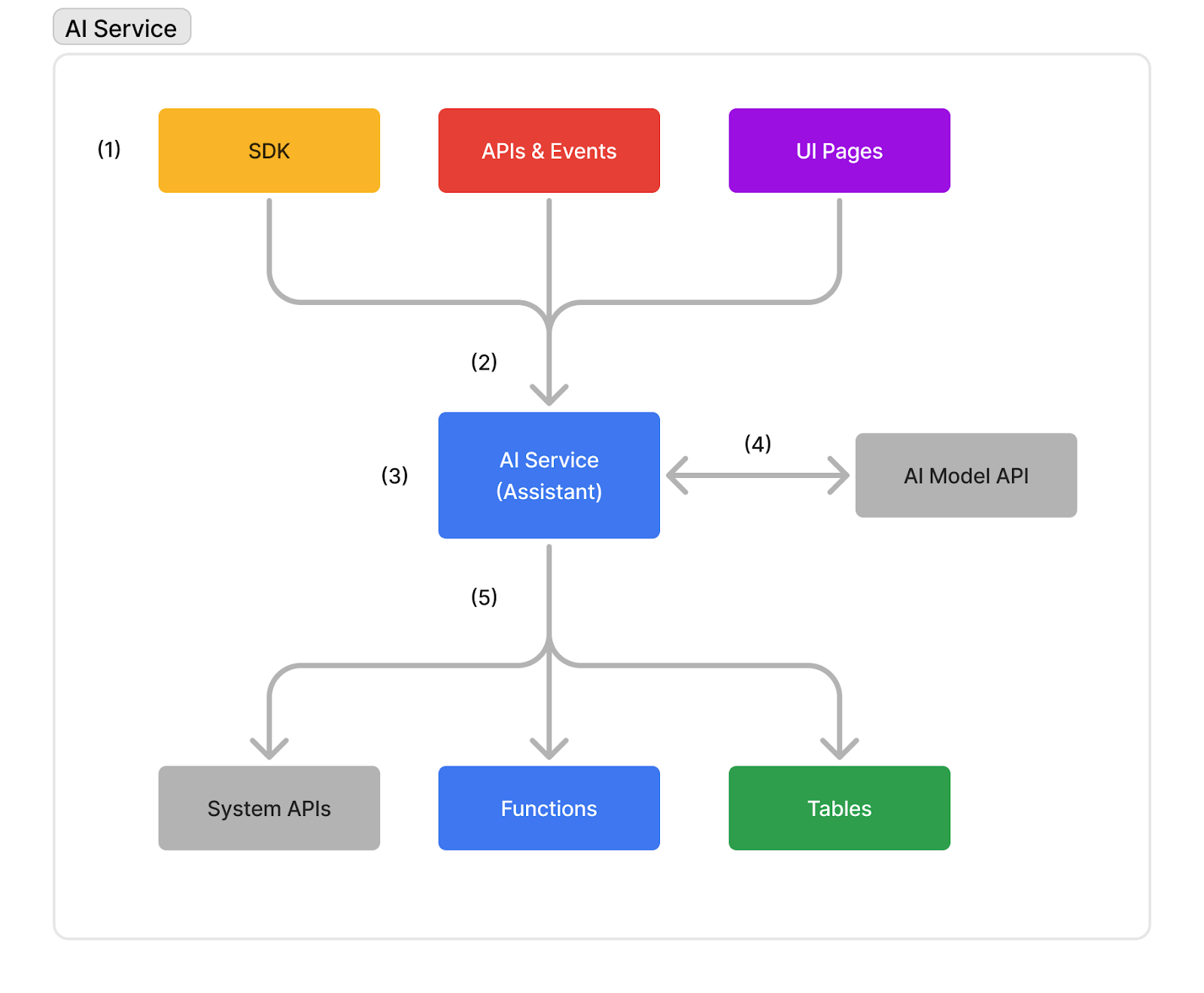

AI-Powered Functions

AI services will share many capabilities with traditional functions but differ in key ways:

1. Invocation Methods (Same) – AI Services are modeled as functions that share the same contexts and invocation methods as server functions, offering consumers maximum flexibility.

2. Strongly Typed Interfaces (Same) – AI services conform to a typed model, ensuring predictable and structured upstream consumption.

3. Encapsulated AI Services (Different) – There is no implementation; the service is configured to interact with an AI model. The service, not the AI model, invokes API calls—ensuring the AI model has no direct access to credentials or underlying systems.

4. Model Support & API Execution (Different) – Poly will support multiple AI models, provided they can handle function calling. Poly’s outbound gateway effectively provides an API for any underlying system, including REST, SOAP, other Poly functions or AI services, and Poly tables, so the AI simply specifies the next call without being responsible for executing it. This unified execution framework lets the AI orchestrate workflows across different systems without dealing with provider-specific complexities, as if everything lived in one cohesive environment.

5. Cataloged & Controlled Interactions (Same) – Like server functions, AI Services can invoke underlying server functions, APIs, or tables, each adhering to its schemas and strongly typed models.
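
The division of labor in the list above, where the model only names the next call and the service executes it, can be sketched as a simple loop. This is an illustration under stated assumptions: the message format, `run_ai_service`, the scripted model, and the `bookings.search` tool are all hypothetical, and a real deployment would call an actual function-calling model instead of `scripted_model`.

```python
from typing import Any, Callable

Message = dict[str, Any]
Model = Callable[[list[Message]], Message]


def run_ai_service(prompt: str, model: Model,
                   tools: dict[str, Callable[..., Any]],
                   max_steps: int = 5) -> dict[str, Any]:
    """Let the model choose each call; the service executes it and feeds back the output."""
    history: list[Message] = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        step = model(history)
        if "result" in step:
            return step["result"]
        name, args = step["call"], step.get("args", {})
        if name not in tools:
            raise PermissionError(f"{name} is not a cataloged call")
        output = tools[name](**args)  # executed by the service, never by the model
        history.append({"role": "tool", "name": name, "content": output})
    raise RuntimeError("model did not finish within max_steps")


def scripted_model(history: list[Message]) -> Message:
    """Stand-in for a function-calling model: request one search, then summarize."""
    tool_messages = [m for m in history if m["role"] == "tool"]
    if not tool_messages:
        return {"call": "bookings.search", "args": {"room_type": "suite"}}
    bookings = tool_messages[-1]["content"]
    matches = [b["id"] for b in bookings if "beverage" in b["special_request"]]
    return {"result": {"booking_ids": matches}}


def search_bookings(room_type: str) -> list[dict[str, Any]]:
    """Canned data; a real tool would hold the credentials and call the booking system."""
    bookings = [
        {"id": 101, "room_type": "suite", "special_request": "beverage service at 6pm"},
        {"id": 102, "room_type": "suite", "special_request": "late checkout"},
        {"id": 103, "room_type": "standard", "special_request": "beverage service"},
    ]
    return [b for b in bookings if b["room_type"] == room_type]


answer = run_ai_service(
    "Bookings of suites where the special request is for beverage services",
    scripted_model,
    {"bookings.search": search_bookings},
)
print(answer)  # {'booking_ids': [101]}
```

The model only ever sees call names, arguments, and returned data. Credentials stay inside the tool implementations, and any call outside the catalog is refused before it runs.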

Moving Forward

Although we have the necessary building blocks, we recognize that additional refinements are needed to make the platform seamless and elegant. We are exploring new opportunities to accelerate realizing this vision while supporting our existing customers and sustaining our continued growth in enterprise adoption. We’ll keep you updated as developments unfold.

Want to learn more about Poly? Reach out to us for a demo!